According to tech outlet Aroged, AMD is set to unveil its next-generation, CDNA-based Instinct MI100 accelerator on 16th November. The information comes from leaked documents that are part of the embargoed datasheets for AMD’s next-generation data center and HPC accelerator lineup.

AMD Instinct MI100 With First-Generation CDNA Architecture Launches on 16th November – Aims To Tackle NVIDIA’s A100 In The Data Center Segment With Fastest Double Precision Power

The AMD Instinct MI100 accelerator was confirmed by AMD CTO Mark Papermaster almost five months ago. At the time, Papermaster stated that AMD would introduce the CDNA-based Instinct GPU in the second half of 2020. With the end of the year approaching, AMD now looks set to launch the most powerful data center GPU it has ever built under the leadership of RTG’s new chief, David Wang.

AMD’s Radeon Instinct MI100 will use the CDNA architecture, which is entirely different from the RDNA architecture that gamers will get access to later this month. CDNA has been designed specifically for the HPC segment and will be pitted against NVIDIA’s Ampere A100 and similar accelerator cards.

Based on what we have learned from various prototype leaks, the Radeon Instinct MI100 ‘Arcturus’ GPU will come in several variants. The flagship is the D34303 SKU, which uses the XL variant. The information for this part comes from a test board, so the final specifications will likely differ, but here are the key points:

- Based on Arcturus GPUs (1st Gen CDNA)

- Test Board has a TDP of 200W (Final variants ~300-350W)

- Up To 32 GB HBM2e Memory

Specifications previously leaked by AdoredTV suggest that the AMD Instinct MI100 will feature 34 TFLOPs of FP32 compute per GPU. Each Radeon Instinct MI100 GPU will have a TDP of 300W and 32 GB of HBM2e memory, which should deliver 1.225 TB/s of total bandwidth.
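The bandwidth figure follows from the memory interface listed for the MI100 in the table below (a 4096-bit bus with a 1200 MHz memory clock). A minimal Python sketch of that arithmetic, assuming the HBM-class memory transfers data on both clock edges (two transfers per clock); the function name is purely illustrative:

```python
def peak_bandwidth_gbs(bus_width_bits: int, memory_clock_mhz: float,
                       transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal units).

    Assumption: transfers_per_clock defaults to 2 because HBM/HBM2
    is double data rate (one transfer on each clock edge).
    """
    bits_per_second = bus_width_bits * memory_clock_mhz * 1e6 * transfers_per_clock
    return bits_per_second / 8 / 1e9  # bits -> bytes -> gigabytes


# MI100 values from the spec table: 4096-bit bus, 1200 MHz memory clock.
mi100 = peak_bandwidth_gbs(4096, 1200)
print(f"MI100: {mi100:.1f} GB/s")
```

The same formula also reproduces the older HBM-based rows of the table, for example the MI50’s 4096-bit bus at 1000 MHz working out to roughly 1 TB/s.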

As per the embargo, the AMD Instinct MI100 HPC accelerator will be unveiled on 16th November at 8 AM CDT.

AMD Radeon Instinct Accelerators 2020

| Accelerator Name | AMD Radeon Instinct MI6 | AMD Radeon Instinct MI8 | AMD Radeon Instinct MI25 | AMD Radeon Instinct MI50 | AMD Radeon Instinct MI60 | AMD Radeon Instinct MI100 |
|---|---|---|---|---|---|---|
| GPU Architecture | Polaris 10 | Fiji XT | Vega 10 | Vega 20 | Vega 20 | Arcturus |
| GPU Process Node | 14nm FinFET | 28nm | 14nm FinFET | 7nm FinFET | 7nm FinFET | 7nm FinFET |
| GPU Cores | 2304 | 4096 | 4096 | 3840 | 4096 | 7680 |
| GPU Clock Speed | 1237 MHz | 1000 MHz | 1500 MHz | 1725 MHz | 1800 MHz | ~1500 MHz |
| FP16 Compute | 5.7 TFLOPs | 8.2 TFLOPs | 24.6 TFLOPs | 26.5 TFLOPs | 29.5 TFLOPs | 185 TFLOPs |
| FP32 Compute | 5.7 TFLOPs | 8.2 TFLOPs | 12.3 TFLOPs | 13.3 TFLOPs | 14.7 TFLOPs | 23.1 TFLOPs |
| FP64 Compute | 384 GFLOPs | 512 GFLOPs | 768 GFLOPs | 6.6 TFLOPs | 7.4 TFLOPs | 11.5 TFLOPs |
| VRAM | 16 GB GDDR5 | 4 GB HBM1 | 16 GB HBM2 | 16 GB HBM2 | 32 GB HBM2 | 32 GB HBM2 |
| Memory Clock | 1750 MHz | 500 MHz | 945 MHz | 1000 MHz | 1000 MHz | 1200 MHz |
| Memory Bus | 256-bit bus | 4096-bit bus | 2048-bit bus | 4096-bit bus | 4096-bit bus | 4096-bit bus |
| Memory Bandwidth | 224 GB/s | 512 GB/s | 484 GB/s | 1 TB/s | 1 TB/s | 1.23 TB/s |
| Form Factor | Single Slot, Full Length | Dual Slot, Half Length | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Full Length | Dual Slot, Full Length |
| Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling | Passive Cooling |
| TDP | 150W | 175W | 300W | 300W | 300W | 300W |
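The FP32 row of the table can be cross-checked against the core counts and clock speeds. A minimal Python sketch, assuming each GPU core retires one fused multiply-add per clock, counted as two FLOPs; the core counts and clocks are the table’s values, and the MI100 clock is itself only approximate (~1500 MHz):

```python
def peak_fp32_tflops(cores: int, clock_mhz: float,
                     flops_per_core_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPs.

    Assumption: one FMA (2 FLOPs) per core per clock cycle.
    """
    return cores * clock_mhz * 1e6 * flops_per_core_per_clock / 1e12


# (GPU cores, GPU clock in MHz) as listed in the table above.
accelerators = {
    "MI6": (2304, 1237),
    "MI8": (4096, 1000),
    "MI25": (4096, 1500),
    "MI50": (3840, 1725),
    "MI60": (4096, 1800),
    "MI100": (7680, 1500),
}

for name, (cores, clock) in accelerators.items():
    print(f"{name}: {peak_fp32_tflops(cores, clock):.2f} TFLOPs")
```

The computed values land within about 0.1 TFLOPs of the table; the small gaps (such as 23.04 vs 23.1 TFLOPs for the MI100) are likely due to rounded or approximate clock figures.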

AMD’s Radeon Instinct MI100 ‘CDNA GPU’ Performance Numbers, An FP32 Powerhouse In The Making?

In terms of performance, the AMD Radeon Instinct MI100 was compared to the NVIDIA Volta V100 and NVIDIA Ampere A100 GPU accelerators. Interestingly, the slides mention a 300W Ampere A100 accelerator, although no such configuration exists. This suggests the slides are based on a hypothetical A100 configuration rather than an actual variant; the A100 comes in two flavors, a 400W version in the SXM form factor and a 250W version in the PCIe form factor.

As per the benchmarks, the Radeon Instinct MI100 delivers around 13% better FP32 performance than the Ampere A100 and more than twice the performance of the Volta V100. The slides also compare performance per value, with the MI100 offering around 2.4x better value than the V100S and 50% better value than the Ampere A100. They also show near-linear performance scaling with up to 32 GPUs in ResNet, which is quite impressive.
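For reference, one common way to quantify “near-linear” scaling is scaling efficiency, which compares multi-GPU throughput against a perfect multiple of single-GPU throughput. Here $T_1$ is the throughput of one GPU (for example, ResNet images per second) and $T_N$ is the throughput with $N$ GPUs; both symbols are introduced for illustration only:

```latex
E(N) = \frac{T_N}{N \, T_1}
```

Perfectly linear scaling gives $E(N) = 1$, so near-linear scaling at 32 GPUs means $E(32)$ stays close to 1, i.e. $T_{32} \approx 32\,T_1$.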

AMD Radeon Instinct MI100 vs NVIDIA’s Ampere A100 HPC Accelerator (Image Credits: AdoredTV):

With that said, the slides also mention that AMD will offer much better performance and value in three specific segments: Oil & Gas, Academia, and HPC & Machine Learning. In the rest of the HPC workloads, such as AI & data analytics, NVIDIA will offer far superior performance with its A100 accelerator. NVIDIA also holds the advantage of its Multi-Instance GPU architecture. The performance metrics show the A100 with 2.5x better FP64 performance, 2x better FP16 performance, and twice the tensor performance, thanks to the latest-gen Tensor cores on the Ampere A100 GPU.

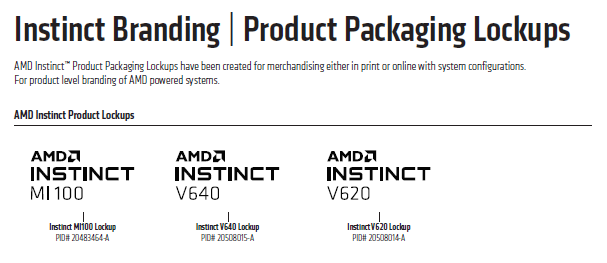

In addition to the Instinct MI100, AMD is also going to introduce its Instinct V640 and V620 GPU accelerators. Specifications for these products are not known yet, but we will learn more about them soon.

AMD has proven that it can offer more FLOPs at a competitive price, so that may be the market Arcturus is targeting. With the Radeon Instinct MI100 now slated for 2H 2020, we will soon see how AMD’s latest CDNA architecture competes against NVIDIA’s Ampere-based A100 GPU accelerator in the HPC segment.
